StorSimple 8k series as a backup target?

19 December 2016

After a conference call with Microsoft Azure StorSimple product team, they explained:

- “The maximum recommended full backup size when using an 8100 as a primary backup target is 10TiB. The maximum recommended full backup size when using an 8600 as a primary backup target is 20TiB”

- “Backups will be written to array, such that they reside entirely within the local storage capacity”

Microsoft acknowledge the difficulty resulting from the maximum provisionable space being 200 TB on an 8100 device, which limits the ability to over-provision thin-provisioned tiered iSCSI volumes when expecting significant deduplication/compression savings with long term backup copy job Veeam files for example.

Conclusion

- When used as a primary backup target, StorSimple 8k devices are intended for SMB clients with backup files under 10TB/20TB for the 8100/8600 models respectively

- Compared to using an Azure A4 VM with attached disks (page blobs), StorSimple provides 7-22% cost savings over 5 years

15 December 2016

On 13 December 2016, Microsoft announced the support of using StorSimple 8k devices as a backup target. Many customers have asked for StorSimple to support this workload. StorSimple hybrid cloud storage iSCSI SAN features automated tiering at the block level from its SSD to SAS to Azure tiers. This makes it a perfect fit for Primary Data Set for unstructured data such as file shares. It also features cloud snapshots which provide the additional functionality of data backup and disaster recovery. That’s primary storage, secondary storage (short term backups), long term storage (multiyear retention), off site storage, and multi-site storage, all in one solution.

However, the above features that lend themselves handy to the primary data set/unstructured data pose significant difficulties when trying to use this device as a backup target, such as:

- Automated tiering: Many backup software packages (like Veeam) would do things like a forward incremental, synthetic full, backup copy job for long term retention. All of which would scan/access files that are typically dozens of TB each. This will cause the device to tier data to Azure and back to the local device in a way that slows things down to a crawl. DPM is even worse; specifically the way it allocates/controls volumes.

- The arbitrary maximum allocatable space for a device (200TB for an 8100 device for example), makes it practically impossible to use the device as backup target for long term retention.

- Example: 50 TB volume, need to retain 20 copies for long term backup. Even if change rate is very low and actual bits after deduplication and compression of 20 copies is 60 TB, we cannot provision 20x 50 TB volumes, or a 1 PB volume. Which makes the maximum workload size around 3TB if long term retention requires 20 recovery points. 3TB is way too small of a limit for enterprise clients who simply want to use Azure for long term backup where a single backup file is 10-200 TB.

- The specific implementation of the backup catalog and who (the backup software versus StorSimple Manager service) has it.

- Single unified tool for backup/recovery – now we have to use the backup software and StorSimple Manager, which do not communicate and are not aware of each other

- Granular recoveries (single file/folder). Currently to recover a single file from snapshot, we must clone the entire volume.

In this article published 6 December 2016, Microsoft lays out their reference architecture for using StorSimple 8k device as a Primary Backup Target for Veeam

There’s a number of best practices relating to how to configure Veeam and StorSimple in this use case, such as disabling deuplication, compression, and encryption on the Veeam side, dedicating the StorSimple device for the backup workload, …

The interesting part comes in when you look at scalability. Here’s Microsoft’s listed example of a 1 TB workload:

This architecture suggests provisioning 5*5TB volumes for the daily backups and a 26TB volume for the weekly, monthly, and annual backups:

This 1:26 ratio between the Primary Data Set and Vol6 used for the weekly, monthly, and annual backups suggests that the maximum supported Primary Data Set is 2.46 TB (maximum volume size is 64 TB) !!!???

This reference architecture suggests that this solution may not work for a file share that is larger than 2.5TB or may need to be expanded beyond 2.5TB

Furthermore, this reference architecture suggests that the maximum Primary Data Set cannot exceed 2.66TB on an 8100 device, which has 200TB maximum allocatable capacity, reserving 64TB to be able to restore the 64TB Vol6

It also suggests that the maximum Primary Data Set cannot exceed 8.55TB on an 8600 device, which has 500TB maximum allocatable capacity, reserving 64TB to be able to restore the 64TB Vol6

Even if we consider cloud snapshots to be used only in case of total device loss – disaster recovery, and we allocate the maximum device capacity, the 8100 and 8600 devices can accommodate 3.93TB and 9.81TB respectively:

Conclusion:

Although the allocation of 51TB of space to backup 1 TB of data resolves the tiering issue noted above, it significantly erodes the value proposition provided by StorSimple.

Azure, Veeam, and Gridstore, a match made in heaven!?

Microsoft Azure currently provides the best quality public cloud platform available. In a 2013 industry report benchmark comparison of performance, availability and scalability, Azure came out on top in terms of read, and write performance of Blob storage.

Veeam is a fast growing software company that provides a highly scalable, feature-rich, robust backup and replication solution that’s built for virtualized workloads from the ground up including VMWare and Hyper-V virtual workloads. Veeam has been on the cutting edge of backup and replication technologies with unique features like SureBackup/verified protection, Virtual labs, Universal Application Item Recovery, and well-developed reporting. Not to mention a slew of ‘Explorers‘ like SQL, Exchange, SharePoint, and Active Directory Explorer. Veeam Cloud Connect is a feature added in version 8 in December 2014 that allows independent public cloud providers the ability to provide off-site backup for Veeam clients.

Veeam is a fast growing software company that provides a highly scalable, feature-rich, robust backup and replication solution that’s built for virtualized workloads from the ground up including VMWare and Hyper-V virtual workloads. Veeam has been on the cutting edge of backup and replication technologies with unique features like SureBackup/verified protection, Virtual labs, Universal Application Item Recovery, and well-developed reporting. Not to mention a slew of ‘Explorers‘ like SQL, Exchange, SharePoint, and Active Directory Explorer. Veeam Cloud Connect is a feature added in version 8 in December 2014 that allows independent public cloud providers the ability to provide off-site backup for Veeam clients.

Gridstore provides a hardware storage array optimized for Microsoft workloads. At its core, the array is composed of standard rack-mount servers (storage nodes) running Windows OS and Gridstore’s proprietary vController which is a driver that uses local storage on the Gridstore node and presents it to storage-consuming servers over IP.

Gridstore provides a hardware storage array optimized for Microsoft workloads. At its core, the array is composed of standard rack-mount servers (storage nodes) running Windows OS and Gridstore’s proprietary vController which is a driver that uses local storage on the Gridstore node and presents it to storage-consuming servers over IP.

The problem:

Although a single Azure subscription can have 100 Storage Accounts, each can have 500 TB of Blob storage, a single Azure VM can have a limited number of data disks attached to it. Azure VM disks are implemented as Page Blobs which have a 1TB limit as of January 2015. As a result, an Azure VM can have a maximum of 32 TB of attached storage.

Consequently, an Azure VM is currently not fully adequate for use as a Veeam Cloud Connect provider for Enterprise clients who typically need several hundred terabytes of offsite DR/storage.

Possible solution:

If Gridstore is to use Azure VMs as storage nodes, the following architecture may provide the perfect solution to aggregate Azure storage:

(This is a big IF. To my knowledge, Gridstore currently do not offer their product as a software, but only as an appliance)

- 6 VMs to act as Gridstore capacity storage nodes. Each is a Standard A4 size VM with 8 cores, 14 GB RAM, and 16x 1TB disks. I picked Standard A4 to take advantage of a 500 IOPS higher throttle limit per disk as opposed to 300 IO/disk for A4 Basic VM.

- A single 80 TB Grid Protect Level 1 vLUN can be presented from the Gridstore array to a Veeam Cloud Connect VM. This will be striped over 6 nodes and will survive a single VM failure.

- I recommend having a maximum of 40 disks in a Storage Account since a Standard Storage account has a maximum 20k IOPS.

- One A3 VM to be used for Veeam Backup and Replication 8, its SQL 2012 Express, Gateway, and WAN Accelerator. The WAN Accelerator cache disk can be built as a single simple storage space using 8x 50 GB disks, 8-columns providing 480 MB/s or 4K IOPS. This VM can be configured with 2 vNICs which a long awaited feature now available to Azure VMs.

- Storage capacity scalability: simply add more Gridstore nodes. This can scale up to several petabytes.

Azure cost summary:

In this architecture, Azure costs can be summarized as:

That’s $8,419/month for 80 TB payload, or $105.24/TB/month. $4,935 (59%) of which is for Page Blob LRS 96.5 TB raw storage at $0.05/GB/month, and $3,484 (41%) is for compute resources. The latter can be cut in half if Microsoft offers 16 disks for Standard A3 VMs instead of a maximum of 8.

This does not factor in Veeam or Gridstore costs.

Still, highly scalable, redundant, fast storage at $105/TB/month is pretty competitive.

Veeam Cloud Connect on Azure – take 2

2 Months ago in early September 2014, I tested setting up Veeam Cloud Connect on Azure. That was with Veeam version 8 beta 2. Now that Veeam version 8 general availability was November 6th, 2014, I’m revisiting some of the testing with Veeam v8. I’ve also been testing the same with a number of cloud providers who have their own infrastructure. This is helpful to compare performance, identify bottlenecks, and possible issues that may increase or reduce costs.

Summary of steps:

In Azure Management Portal:

- Create a Cloud Service

- Create a Storage Account

- Create a VM: standard A2 with Windows 2012 R2 DC as the OS. Standard A2 is an Azure VM size that comes with 2 (hyperthreaded) processor cores, 3.5 GB of RAM, and up to 4x 1TB page blob disks @ 500 IOPS each. Prior testing with several providers have shown that cloud connect best features such as WAN accelerator need CPU and IOPS resources at the cloud connect provider end.

- Added an endpoint for TCP 6180

- Attached 4x disks to the VM, using max space possible of 1023 GB and RW cache

On the VM:

- I RDP’d to the VM at the port specified under Endpoints/Remote Desktop

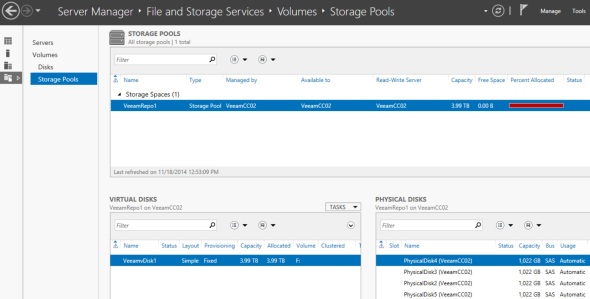

- I ran this small script to create a storage space out of the 4x 1TB disks available:

# Script to create simple disk using all available disks

# This is tailored to use 4 physical disks as a simple space

# Sam Boutros – 11/18/2014 – v1.0

$PoolName = "VeeamRepo1" $vDiskName = "VeeamvDisk1" $VolumeLabel = "VeeamRepo1"

New-StoragePool -FriendlyName $PoolName -StorageSubsystemFriendlyName “Storage Spaces*” -PhysicalDisks (Get-PhysicalDisk -CanPool $True) |

New-VirtualDisk -FriendlyName $vDiskName -UseMaximumSize -ProvisioningType Fixed -ResiliencySettingName Simple -NumberOfColumns 4 -Interleave 256KB |

Initialize-Disk -PassThru -PartitionStyle GPT |

New-Partition -AssignDriveLetter -UseMaximumSize |

Format-Volume -FileSystem NTFS -NewFileSystemLabel $VolumeLabel -AllocationUnitSize 64KB -Confirm:$false

The GUI tools verified successful completion:

This storage space was created to provide best possible performance for Veeam Cloud Connect:

This storage space was created to provide best possible performance for Veeam Cloud Connect:

- Fixed provisioning used instead of thin which is slightly faster

- Simple resiliency – no mirroring or parity, provides best performance. Fault Tolerance will be subject of future designs/tests

- Number of Columns and Interleave size: Interleave * Number Of Columns ==> the size of one stripe. The settings selected make the stripe size 1MB. This will help align the stripe size to the Block size used by Veeam.

- Allocation Unit 64KB for better performance

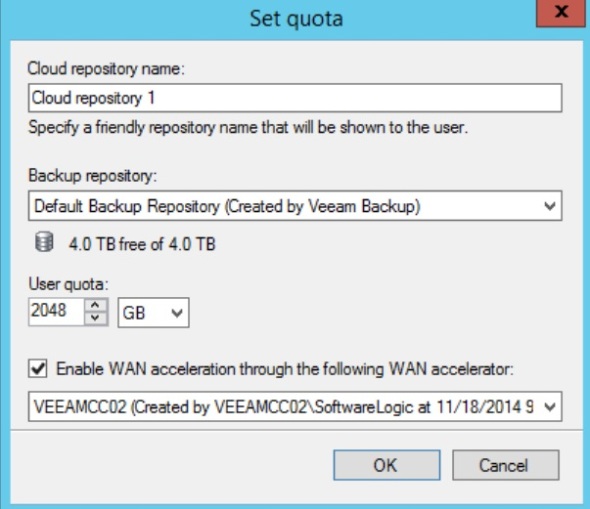

- Installed Veeam 8.0.0.817 Backup and Replication, using the default settings which installed SQL 2012 Express SP1, except that I changed default install location to drive f:\ which is the drive created above

- I ran the SQL script to show the GUI interface as in the prior post (under 11. Initial Veeam Configuration)

- The default backup Repository was already under f:

- Created a WAN Accelerator – cache size 400GB on drive f: created above

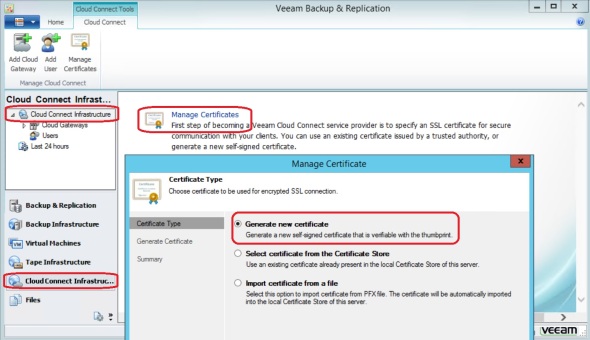

- Installed the additional Cloud Connect License

- Added a self-signed certificate similar to step 14 in the prior post.

- Added a Cloud Gateway

- Added a user/tenant

That’s it. Done setting up Veeam Cloud Connect on the provider side.