Powershell module including functions to report on and migrate IIS6 websites to IIS8.5

This Powershell module includes functions to report on and migrate web sites from IIS version 6 on Windows 2003 servers to IIS 8.5 on Windows 2012 R2 servers. There have been many improvements in Internet Information Services since version 6. With Windows 2003 end of life looming on the horizon, this module can help migrate web workloads to Windows 2012 R2.

This module can be downloaded from the Microsoft Script Center Repository. This is intended to be installed and used on the target Windows 2012 R2 server. Functions will remote into the source Windows 2003 server(s) to retrieve website files and settings. Powershell remoting on the source Windows 2003 servers needs to be configured as shown in this post.

The Migrate-WebSite function will migrate websites from IIS 6 on Server 2003 to IIS 8.5 on Server 2012 R2. The source server is left intact; no changes are made to the source Windows 2003 server. The script can be run repeatedly on the same source/target web servers. Each run refreshes files and website settings from the source server, and overwrites the corresponding files and settings on the target server.

This script will handle and migrate:

- Redirected websites

- Websites set on other than typical port 80

- Websites with multiple bindings

- Virtual directories

- HTML, classic ASP, and DotNet websites

This script will NOT migrate SSL certificates

This script is designed to run on the target 2012 R2 web server (Powershell 4). The scriptblocks that interact with Server 2003 have been tested on Powershell 2.

The script will:

- Set NTFS permissions for NetworkService/Modify and IUSR/ReadAndExecute at the $Webroot folder

  - It will update existing permissions, if any.

  - It will not downgrade existing permissions

- Copy the website files from the source server to this server.

  - It will make a folder with the same name as the website name under $Webroot

  - It will copy source files for all virtual folders if any existed

- Set application pool & its properties:

  - Create application pool $AppPool if it did not exist

  - Set its .NET CLR version to v2.0

  - Enable 32-bit applications

  - Change ‘Generate Process Model Event Log Entry’ identity to ‘Network Service’

- Create web site, or update existing site settings

- Add additional bindings if any existed on the source website

- Update the website’s web.config file for DotNet websites

  - Update any paths to reflect the new website path

  - Comment out “configSections” in web.config
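The application pool steps in the list above could be sketched with the WebAdministration module roughly as follows. This is a minimal sketch and not the module's actual code: the function name Set-MigratedAppPool is hypothetical, the identity step is interpreted here as the pool's process model identity, and it assumes an elevated session on the target Server 2012 R2 machine with IIS and the WebAdministration module installed.

```powershell
# Hypothetical helper (not part of the published module): create or update an application pool
function Set-MigratedAppPool {
    param(
        [Parameter(Mandatory = $true)][string]$AppPool
    )
    # Loaded inside the function so that defining it does not require IIS
    Import-Module WebAdministration
    $PoolPath = "IIS:\AppPools\$AppPool"

    # Create the application pool only if it does not exist yet
    if (-not (Test-Path -Path $PoolPath)) {
        New-WebAppPool -Name $AppPool | Out-Null
    }

    # .NET CLR version v2.0
    Set-ItemProperty -Path $PoolPath -Name managedRuntimeVersion -Value 'v2.0'
    # Enable 32-bit applications
    Set-ItemProperty -Path $PoolPath -Name enable32BitAppOnWin64 -Value $true
    # Run the worker process as Network Service
    Set-ItemProperty -Path $PoolPath -Name processModel.identityType -Value 'NetworkService'
}
```

It would be called as Set-MigratedAppPool -AppPool 'MyWebSite1.com'. Because every step either creates or overwrites a setting, running it repeatedly should leave the pool in the same state, which matches the re-runnable behavior described above.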

Benchmarking Azure VM storage

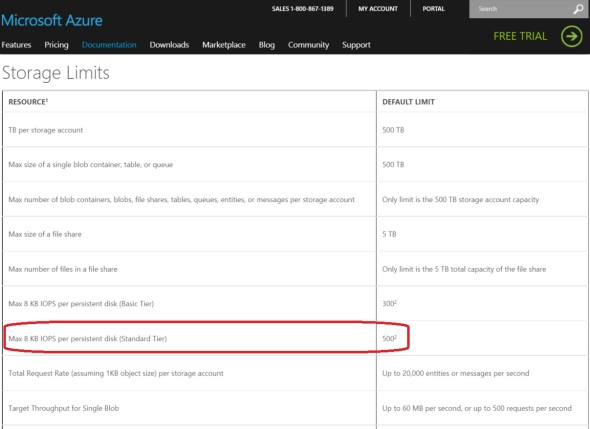

Azure Standard tier virtual machines come with an optional number of persistent page blob disks, up to 1 terabyte each. These disks are expected to deliver 500 IOPS or 60 MB/s throughput each.

Let’s see if we can make that happen.

I started by creating a new Standard Storage account. This is to make sure I’m not hitting the limitation of 20k IOPS/Standard Storage account during testing.

I created a new Standard A3 VM.

From my on-premises management machine, I used Powershell to create and attach 8x 1TB disks to the VM. To get started with Powershell for Azure see this post.

# Input

$SubscriptionName = '###Removed###'

$StorageAccount = 'testdisks1storageaccount'

$VMName = 'testdisks1vm'

$ServiceName = 'testdisks1cloudservice'

$PwdFile = ".\$VMName-###Removed###.txt"

$AdminName = '###Removed###'

# Initialize

Set-AzureSubscription -SubscriptionName $SubscriptionName -CurrentStorageAccount $StorageAccount

$objVM = Get-AzureVM -Name $VMName -ServiceName $ServiceName

$VMFQDN = (Get-AzureWinRMUri -ServiceName $ServiceName).Host

$Port = (Get-AzureWinRMUri -ServiceName $ServiceName).Port

# Create and attach 8x 1TB disks

0..7 | % {

  $_

  $objVM | Add-AzureDataDisk -CreateNew -DiskSizeInGB 1023 -DiskLabel "Disk$_" -LUN $_ | Update-AzureVM

}

Next I used Powershell remoting to create a Storage Space optimized for 1 MB writes. The intent is to use the VM with Veeam Cloud Connect. This particular workload uses 1 MB write operations.

This code block connects to the Azure VM:

# Remote to the VM

# Get certificate for Powershell remoting to the Azure VM if not installed already

Write-Verbose "Adding certificate 'CN=$VMFQDN' to 'LocalMachine\Root' certificate store.."

$Thumbprint = (Get-AzureVM -ServiceName $ServiceName -Name $VMName | select -ExpandProperty VM).DefaultWinRMCertificateThumbprint

$Temp = [IO.Path]::GetTempFileName()

(Get-AzureCertificate -ServiceName $ServiceName -Thumbprint $Thumbprint -ThumbprintAlgorithm sha1).Data | Out-File $Temp

$Cert = New-Object System.Security.Cryptography.X509Certificates.X509Certificate2 $Temp

$store = New-Object System.Security.Cryptography.X509Certificates.X509Store "Root","LocalMachine"

$store.Open([System.Security.Cryptography.X509Certificates.OpenFlags]::ReadWrite)

$store.Add($Cert)

$store.Close()

Remove-Item $Temp -Force -Confirm:$false

# Attempt to open Powershell session to Azure VM

Write-Verbose "Opening PS session with computer '$VMName'.."

if (-not (Test-Path -Path $PwdFile)) {

  Write-Verbose "Pwd file '$PwdFile' not found, prompting to pwd.."

  Read-Host "Enter the pwd for '$AdminName' on '$VMFQDN'" -AsSecureString | ConvertFrom-SecureString | Out-File $PwdFile

}

$Pwd = Get-Content $PwdFile | ConvertTo-SecureString

$Cred = New-Object -TypeName System.Management.Automation.PSCredential -ArgumentList $AdminName, $Pwd

$Session = New-PSSession -ComputerName $VMFQDN -Port $Port -UseSSL -Credential $Cred -ErrorAction Stop

Now I have an open PS session with the Azure VM and can execute commands and get output back.

Next I check/verify available disks on the VM:

$ScriptBlock = { Get-PhysicalDisk -CanPool $True }

$Result = Invoke-Command -Session $Session -ScriptBlock $ScriptBlock

$Result | sort friendlyname | FT -a

Then I create an 8-column simple storage space optimized for 1 MB stripe size:

$ScriptBlock = {

  $PoolName = "VeeamPool3"

  $vDiskName = "VeeamVDisk3"

  $VolumeLabel = "VeeamRepo3"

  # 8 columns x 128KB interleave = 1 MB full stripe across the 8 data disks

  New-StoragePool -FriendlyName $PoolName -StorageSubsystemFriendlyName "Storage Spaces*" -PhysicalDisks (Get-PhysicalDisk -CanPool $True) |

  New-VirtualDisk -FriendlyName $vDiskName -UseMaximumSize -ProvisioningType Fixed -ResiliencySettingName Simple -NumberOfColumns 8 -Interleave 128KB |

  Initialize-Disk -PassThru -PartitionStyle GPT |

  New-Partition -AssignDriveLetter -UseMaximumSize |

  Format-Volume -FileSystem NTFS -NewFileSystemLabel $VolumeLabel -AllocationUnitSize 64KB -Confirm:$false

  Get-VirtualDisk | select FriendlyName,Interleave,LogicalSectorSize,NumberOfColumns,PhysicalSectorSize,ProvisioningType,ResiliencySettingName,Size,WriteCacheSize

}

$Result = Invoke-Command -Session $Session -ScriptBlock $ScriptBlock

$Result | FT -a
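As a quick check on the "optimized for 1 MB" claim: for a simple (striped) space, the full stripe is the number of columns multiplied by the interleave. The sketch below only does that arithmetic; the two layouts listed (8 columns with 128KB, 16 columns with 64KB) are the combinations that work out to a 1 MB stripe for the 8-disk and 16-disk tests, and are assumptions about the intended settings rather than values read back from the VM.

```powershell
# Full stripe size = NumberOfColumns x Interleave (simple/striped layout)
$Layouts = @(
    [pscustomobject]@{ Columns = 8;  Interleave = 128KB },   # 8-disk test (Standard A3)
    [pscustomobject]@{ Columns = 16; Interleave = 64KB }     # 16-disk test (Standard A4)
)

$Stripes = $Layouts | ForEach-Object {
    [pscustomobject]@{
        Columns      = $_.Columns
        InterleaveKB = $_.Interleave / 1KB
        StripeMB     = ($_.Columns * $_.Interleave) / 1MB
    }
}

$Stripes | Format-Table -AutoSize
```

Both rows come out to a 1 MB stripe, so a single 1 MB write touches every column exactly once.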

Next I RDP to the VM, and run IOMeter using the following settings:

- All 4 workers (CPU cores)

- Maximum Disk Size 1,024,000 sectors (512 MB test file)

- # of Outstanding IOs: 20 per target. This is to make sure the target disk is getting plenty of requests during the test

- Access specification: 64KB, 50% read, 50% write

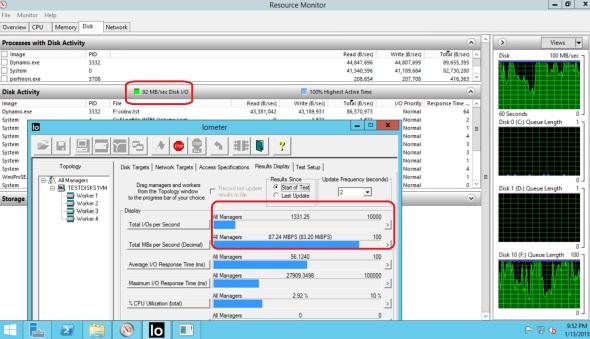

I’m expecting to see 4K IOPS and 480 MB/s throughput (8 disks x 500 IOPS or 60 MB/s each). But this is what I get:

I got about 1.3 K IOPS instead of 4K, and about 90 MB/s throughput instead of 480 MB/s.
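The expected figures are simply the per-disk targets multiplied by the number of disks. A small sketch of that arithmetic, using the approximate observed values quoted above (the variable names are only for illustration):

```powershell
# Per-disk targets for Standard tier page blob disks, as stated earlier
$DiskCount   = 8
$IopsPerDisk = 500
$MBpsPerDisk = 60

$ExpectedIops = $DiskCount * $IopsPerDisk   # 4000
$ExpectedMBps = $DiskCount * $MBpsPerDisk   # 480

# Approximate observed values from the IOMeter run
$ObservedIops = 1300
$ObservedMBps = 90

[pscustomobject]@{
    ExpectedIops = $ExpectedIops
    ObservedIops = $ObservedIops
    IopsRatio    = $ObservedIops / $ExpectedIops
    ExpectedMBps = $ExpectedMBps
    ObservedMBps = $ObservedMBps
    MBpsRatio    = $ObservedMBps / $ExpectedMBps
} | Format-List
```

In other words, the 8-disk space delivered roughly a third of the expected IOPS and under a fifth of the expected throughput.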

This VM and storage account are in East US 2 region.

This is consistent with other people’s findings like this post.

I also tested using the same 8 disks as a striped disk in Windows. I removed the volume, vDisk, Storage Space, then provisioned a traditional RAID 0 striped disk in this Windows Server 2012 R2 VM. Results were slightly better:

This is still far off the expected 4k IOPS or 480 MB/s I should be seeing here.

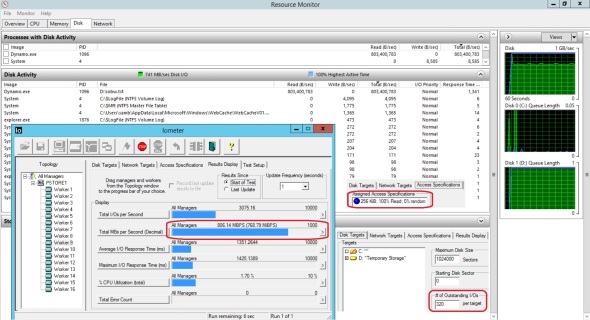

I upgraded the VM to Standard A4 tier, and repeated the same tests:

A Standard A4 VM can have a maximum of 16x 1TB persistent page blob disks. I used Powershell to provision and attach 16 disks, then created a storage space with 16 columns optimized for a 1 MB stripe:

Then I benchmarked storage performance on drive e: using the exact same IOMeter settings as above:

Results are proportionate to the Standard A3 VM test, but they still fall far short.

I’m seeing 2.7 K IOPS instead of the expected 8K IOPS, and about 175 MB/s throughput instead of the expected 960 MB/s
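One way to sanity-check these numbers is to convert IOPS into throughput at the 64KB IO size used in the access specification. The helper below is a hypothetical illustration (Get-ImpliedThroughputMB is not an IOMeter or Azure function); it only multiplies IOPS by IO size.

```powershell
# Throughput (MB/s) implied by a given IOPS figure at a fixed IO size
function Get-ImpliedThroughputMB {
    param(
        [double]$Iops,
        [int]$IoSizeBytes = 64KB   # matches the 64KB access specification
    )
    [Math]::Round($Iops * $IoSizeBytes / 1MB, 1)
}

# Observed IOPS on the A3 (8 disks) and A4 (16 disks) tests
Get-ImpliedThroughputMB -Iops 1300   # roughly 81 MB/s, close to the ~90 MB/s measured
Get-ImpliedThroughputMB -Iops 2700   # roughly 169 MB/s, close to the ~175 MB/s measured

# Expected IOPS ceilings at the same IO size
Get-ImpliedThroughputMB -Iops 4000   # 250 MB/s
Get-ImpliedThroughputMB -Iops 8000   # 500 MB/s
```

The measured IOPS and MB/s figures are consistent with each other. It also suggests that with 64KB IOs the IOPS limit (250 MB/s and 500 MB/s equivalent) would be reached before the 480 MB/s and 960 MB/s throughput limits, so the IOPS gap is probably the fairer comparison for this access specification.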

The IOMeter ‘Maximum I/O Response Time’ is extremely high (26+ seconds). This has been a consistent finding in all Azure VM testing. This leads me to suspect that the disk requests are being throttled (possibly by the hypervisor).

NJ PowerShell User Group January 8th 2015 Meetup

Yesterday evening I attended the NJ PowerShell User Group January 8th Meetup online. It was attended by a nice group including Jeffrey Snover, the godfather of PowerShell, and half a dozen or so MVPs including Joel Bennett, Dave Jones, Trevor Sullivan, Keith Hill, Kirk Munro, Jason Milczek, and Dave Wyatt. The System Center folks may find the first link interesting.

The main subject was PowerShell 5. Interesting links from the meetup include:

- The future of SMA/Orchestrator: A disappointment

- PowerShell 5.0 – Debug Background Jobs (Video)

- Pash Project

- Implementing a .NET Class in PowerShell v5

- PowerShell classes

- ProGet

- Package Management for PowerShell Modules with PowerShellGet

- PowerShell Gallery – This is in preview now

- Helge’s complaints

Powershell script/tool to get OS image information from WIM file

This script/function/tool returns information on images contained in a WIM file. For more information on WIM files see this link. WIM file information is available via the Deployment Image Servicing and Management (DISM) command-line tool. For more information on Dism.exe see this link.

The DISM tool returns the information as text. This script/function/tool extracts information from the Dism.exe output text and returns a PS Object containing the same information. This makes it easily available for further processing or reporting. Similar information is available from the Get-WindowsImage Cmdlet of the DISM PS module, but this script/tool returns more details.
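The general idea of turning "Name : Value" text into objects can be sketched as below. This is not the Get-WimImage implementation: the function name ConvertFrom-KeyValueText is hypothetical, and the sample text is made up, only loosely modelled on the kind of output Dism.exe prints for image information.

```powershell
# Made-up sample in a DISM-like "Name : Value" layout, one blank line between images
$SampleText = @'
Index : 1
Name : Windows Server 2012 R2 SERVERSTANDARD
Size : 12,000,000,000 bytes

Index : 2
Name : Windows Server 2012 R2 SERVERDATACENTER
Size : 12,500,000,000 bytes
'@

function ConvertFrom-KeyValueText {
    param([Parameter(Mandatory = $true)][string]$Text)
    # Each block separated by blank line(s) becomes one object
    foreach ($Block in ($Text -split '(?:\r?\n){2,}')) {
        $Props = [ordered]@{}
        foreach ($Line in ($Block -split '\r?\n')) {
            # Text before the first colon is the property name, the rest is the value
            if ($Line -match '^\s*([^:]+?)\s*:\s*(.*)$') {
                $Props[$Matches[1]] = $Matches[2].Trim()
            }
        }
        if ($Props.Count -gt 0) { [pscustomobject]$Props }
    }
}

$Parsed = @(ConvertFrom-KeyValueText -Text $SampleText)

# Values arrive as strings; convert Size to a number for further processing
$Parsed | Select Index,Name,@{N='SizeBytes';E={[int64]($_.Size -replace '\D')}} | FT -a
```

Once the text is in object form, the usual Select, Sort, Export-Csv, and Out-GridView pipelines apply, which is the point of wrapping Dism.exe this way.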

This script can be downloaded from the Microsoft Script Center Repository.

Example:

Get-WimImage -WimPath H:\sources\install.wim

where h:\sources\install.wim is the path to the WIM file. Output will look like:

Example:

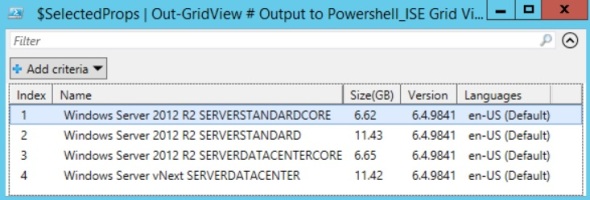

$Images = Get-WimImage H:\sources\install.wim | Select Index,Name,Size,Version,Languages

$Images | FT -a # Output to console

$Images | Out-GridView # Output to Powershell_ISE Grid View

$Images | Export-Csv .\Images.csv -NoType # Export to CSV file

In this example, some properties are selected, and displayed in different formats.

Example:

$DriveLetters = (Get-Volume).DriveLetter

$ISO = Mount-DiskImage -ImagePath E:\Install\ISO\Win10\en_windows_server_technical_preview_x64_dvd_5554304.iso -PassThru

$ISODriveLetter = (Compare-Object -ReferenceObject $DriveLetters -DifferenceObject (Get-Volume).DriveLetter).InputObject

$Images = Get-WimImage "$($ISODriveLetter):\sources\install.wim"

Dismount-DiskImage $ISO.ImagePath

$SelectedProps = $Images | Select Index,Name,@{N='Size(GB)';E={[Math]::Round($_.Size/1GB,2)}},Version,Languages

$SelectedProps | FT -a # Output to console

$SelectedProps | Out-GridView # Output to Powershell_ISE Grid View

$SelectedProps | Export-Csv .\Images.csv -NoType

This example mounts the E:\Install\ISO\Win10\en_windows_server_technical_preview_x64_dvd_5554304.iso file, gets the information of the images it contains, dismounts it, presents that information in different formats, and saves it to CSV file.

Example:

$ISOFolder = 'e:\install\ISO\win10'

$Images = @()

(Get-ChildItem -Path $ISOFolder -Filter *.iso).FullName | % {

$DriveLetters = (Get-Volume).DriveLetter

$ISO = Mount-DiskImage -ImagePath $_ -PassThru

$ISODriveLetter = (Compare-Object -ReferenceObject $DriveLetters -DifferenceObject (Get-Volume).DriveLetter).InputObject

$Result = Get-WimImage "$($ISODriveLetter):\sources\install.wim"

$Result | Add-Member 'ISOFile' (Split-Path -Path $ISO.ImagePath -Leaf)

$Images += $Result

Dismount-DiskImage $ISO.ImagePath

Start-Sleep -Seconds 1

}

$Images | Select ISOFile,Index,Name,@{N='Size(GB)';E={[Math]::Round($_.Size/1GB,2)}},Version,Languages | FT -a

This example aggregates results from several ISO files.

Azure, Veeam, and Gridstore, a match made in heaven!?

Microsoft Azure currently provides the best quality public cloud platform available. In a 2013 industry report benchmark comparison of performance, availability and scalability, Azure came out on top in terms of read, and write performance of Blob storage.

Veeam is a fast growing software company that provides a highly scalable, feature-rich, robust backup and replication solution that’s built from the ground up for virtualized workloads, including VMware and Hyper-V. Veeam has been on the cutting edge of backup and replication technologies with unique features like SureBackup/verified protection, Virtual labs, Universal Application Item Recovery, and well-developed reporting, not to mention a slew of ‘Explorers’ for SQL, Exchange, SharePoint, and Active Directory. Veeam Cloud Connect is a feature added in version 8 in December 2014 that allows independent public cloud providers to provide off-site backup for Veeam clients.

Gridstore provides a hardware storage array optimized for Microsoft workloads. At its core, the array is composed of standard rack-mount servers (storage nodes) running Windows OS and Gridstore’s proprietary vController which is a driver that uses local storage on the Gridstore node and presents it to storage-consuming servers over IP.

The problem:

Although a single Azure subscription can have 100 Storage Accounts, each holding up to 500 TB of Blob storage, a single Azure VM can have only a limited number of data disks attached to it. Azure VM disks are implemented as Page Blobs, which have a 1 TB limit as of January 2015. With at most 32 data disks per VM, an Azure VM can have a maximum of 32 TB of attached storage.

Consequently, an Azure VM is currently not fully adequate for use as a Veeam Cloud Connect provider for Enterprise clients who typically need several hundred terabytes of offsite DR/storage.

Possible solution:

If Gridstore were to use Azure VMs as storage nodes, the following architecture may provide the perfect solution to aggregate Azure storage:

(This is a big IF. To my knowledge, Gridstore currently does not offer its product as software, but only as an appliance.)

- 6 VMs to act as Gridstore capacity storage nodes. Each is a Standard A4 size VM with 8 cores, 14 GB RAM, and 16x 1TB disks. I picked Standard A4 to take advantage of the higher throttle limit of 500 IOPS per disk, as opposed to 300 IOPS per disk for a Basic A4 VM.

- A single 80 TB Grid Protect Level 1 vLUN can be presented from the Gridstore array to a Veeam Cloud Connect VM. This will be striped over 6 nodes and will survive a single VM failure.

- I recommend having a maximum of 40 disks in a Storage Account since a Standard Storage account has a maximum 20k IOPS.

- One A3 VM to be used for Veeam Backup and Replication 8, its SQL 2012 Express, Gateway, and WAN Accelerator. The WAN Accelerator cache disk can be built as a single simple storage space using 8x 50 GB disks in 8 columns, providing 480 MB/s or 4K IOPS. This VM can be configured with 2 vNICs, which is a long-awaited feature now available to Azure VMs.

- Storage capacity scalability: simply add more Gridstore nodes. This can scale up to several petabytes.
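The 40-disks-per-account recommendation follows from dividing the Standard Storage account limit by the per-disk limit. A small sketch of that arithmetic for the 6-node layout above; the resulting account count is derived here and is not a figure from the original design:

```powershell
$AccountIopsLimit = 20000   # Standard Storage account limit
$IopsPerDisk      = 500     # Standard tier per-disk limit

# Disks that can run at full speed in one storage account
$MaxDisksPerAccount = [Math]::Floor($AccountIopsLimit / $IopsPerDisk)   # 40

# Capacity nodes in the proposed architecture
$Nodes        = 6
$DisksPerNode = 16
$TotalDisks   = $Nodes * $DisksPerNode   # 96

# Storage accounts needed so that no account exceeds its IOPS limit
$AccountsNeeded = [Math]::Ceiling($TotalDisks / $MaxDisksPerAccount)   # 3

"{0} disks, max {1} per account, {2} storage accounts" -f $TotalDisks, $MaxDisksPerAccount, $AccountsNeeded
```

The 8 cache disks of the Veeam VM would need to be counted as well if they share an account with capacity disks.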

Azure cost summary:

In this architecture, Azure costs can be summarized as:

That’s $8,419/month for 80 TB payload, or $105.24/TB/month. $4,935 (59%) of which is for Page Blob LRS 96.5 TB raw storage at $0.05/GB/month, and $3,484 (41%) is for compute resources. The latter can be cut in half if Microsoft offers 16 disks for Standard A3 VMs instead of a maximum of 8.

This does not factor in Veeam or Gridstore costs.

Still, highly scalable, redundant, fast storage at $105/TB/month is pretty competitive.
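The per-terabyte figure and the storage/compute split can be re-derived from the two monthly totals quoted above. The sketch only repeats that arithmetic:

```powershell
# Monthly figures quoted in the cost summary (USD)
$StorageCost = 4935   # Page Blob LRS raw storage
$ComputeCost = 3484   # VM compute
$PayloadTB   = 80     # usable capacity presented to Veeam

$Total = $StorageCost + $ComputeCost                # 8419
$PerTB = [Math]::Round($Total / $PayloadTB, 2)      # 105.24

$StorageShare = [Math]::Round(100 * $StorageCost / $Total)   # 59
$ComputeShare = [Math]::Round(100 * $ComputeCost / $Total)   # 41

'${0:N0}/month total, ${1}/TB/month, {2}% storage, {3}% compute' -f $Total, $PerTB, $StorageShare, $ComputeShare
```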

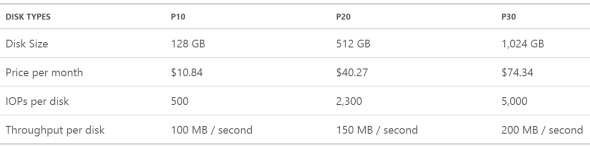

Azure Premium Storage

In December 2014, Microsoft announced the Public Preview Availability release of Azure Premium Storage. Here are the features of Azure Premium Storage (as of January 2015):

Limited Public Preview:

This means you have to sign up for it, and wait to get approved before you can use it.

We also must use the new Azure Portal to manage it. It’s available in West US, East US 2, and West Europe only.

Premium Storage account

This is required for Azure Premium Storage. It has a 32 TB capacity limit, 50K IOPS limit, and can contain Page blobs only.

On the other hand a Standard Storage account has a 500 TB capacity limit, 20k IOPS limit, and can contain both page blobs and block blobs.

Durable/Persistent:

This means it’s available to the Azure VM as it live-migrates from one Hyper-V host to another.

This is unlike the A-Series Azure VM drive ‘d’ which is SAS local temporary storage on the Hyper-V host. It provides a ‘scratch volume’ where we can take advantage of IO operations but data can be lost as the VM is live-migrated from one host to another during planned Azure maintenance or unplanned host failure. The D-Series and DS-Series Azure VMs have SSD local temporary storage drive ‘d’.

SSD:

Solid State Disk based, as opposed to Azure Standard Storage which is SAS spinning hard disk based.

Available to DS series VMs only:

So, we can provision Premium Storage as Page blobs (not block blobs), with the current limitations (maximum size per file is 1,023 GB, must be VHD format not VHDX). Each page blob is a VHD disk file that can be attached to an Azure VM.

Maximum 32 disks per VM:

We can attach up to 32 of these data disks to a single DS-Series VM. The following Powershell cmdlet lists the VM sizes that support 16 or more data disks:

Get-AzureRoleSize | where { $_.MaxDataDiskCount -gt 15 } |

Select RoleSizeLabel,Cores,MemoryInMB,MaxDataDiskCount | Out-GridView

Redundancy:

Locally Redundant. 3 copies of each block are maintained synchronously in the same Azure facility.

Size, Performance:

- IO/s are calculated based on 256KB block size.

- Throughput limits include both read and write operations. So, for a P10 it’s 100MB/s for both read and write operations combined, not each of read and write operations

- Exceeding disk type maximum IO/s or Throughput will result in throttling, where the VM will appear to freeze.

- Less than 1 ms latency for read operations

- Read-caching is set by default on each disk but can be changed to None or Read-Write using Powershell.

Cost:

The only VM size that allows 32 TB of data disk space is the Standard_D14, which currently costs $1,765/month

Each 1TB Premium Storage disk costs $74/month

This adds up to almost $50k/year for a single VM
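The yearly figure can be checked from the two monthly prices quoted above:

```powershell
# Monthly prices quoted above (USD, January 2015)
$VmMonthly   = 1765   # Standard_D14 VM
$DiskMonthly = 74     # one 1TB Premium Storage disk
$DiskCount   = 32     # maximum data disks on this VM size

$MonthlyTotal = $VmMonthly + ($DiskCount * $DiskMonthly)   # 4133
$YearlyTotal  = 12 * $MonthlyTotal                         # 49596

'${0:N0}/month, ${1:N0}/year' -f $MonthlyTotal, $YearlyTotal
```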

That would be a VM with

- 16 CPU cores

- 112 GB RAM

- 32 TB LRS SSD data disk with 6.25 GB/s throughput (160k IOPS)

- 127 GB system/boot disk

- 800 GB SSD local (non-persistent) temporary storage

To put that in perspective relative to the cost of other Azure storage options:

Block Blobs cannot be attached to VMs as disks of course, only Page Blobs can. Also, the D14 size VM is the only one that can have 32 disks currently.

The local SSD temporary non-persistent storage on the D14 VM provides 768 MB/s read throughput or 384 MB/s write throughput.

Script to get website(s) information from IIS 6 on Windows 2003 servers

With Windows Server 2003 end of life coming up on July 14, 2015, many organizations are busy trying to migrate from Server 2003 to Server 2012 R2. This Powershell script/tool will get web site information for one or more web sites from a Windows 2003 Server running IIS 6.

This script is intended to be run from a Windows 8.1 or Server 2012 R2 machine. It has been tested on Server 2012 R2 (Powershell 4). However, the scriptblocks that interact with Server 2003 have been tested on Powershell 2. The script will powershell-remote into the Windows 2003 server to retrieve the website(s) information. For more information on Powershell remoting to Server 2003 from Windows 8.1/Server 2012 R2 see this post.

To use this script, download it from the Microsoft Script Center Repository, unblock the file, and run it like:

.\Get-WebSite.ps1 -WebSite MyWebSite1.com -ComputerName MyW2k3Server -AdminName administrator

The script saves the encrypted admin password for future use. If the file does not exist, the user is prompted for the password:

The script output is a PS Object containing:

- WebSiteName: for example http://www.mywebsite.com or mywebsite.net

- Bindings: each binding will have hostname, IP, and Port properties

  - if the host header name is bound to * All Unassigned *, the returned IP will be blank

- Path: local path on web server where the web site resides

  - if the web site is redirected in IIS, this field will contain redirection URL

- FileCount: number of files in the web site

- FolderSize: total file size of the web site

- vFolders: each VirtualFolder will have Path, vFolder, FileCount, FolderSize

  - This will be blank for redirected websites

- AutoStart: True or False value to indicate whether or not the website is set to auto-start

- ID: internal ID number used by IIS

- LogFolder: local path to IIS logs for this web site

- Server: name of the web server where this web site resides

- SSL: secure binding (if any). It has IP and Port properties

Note:

The output object of this script contains Bindings, SSL, and vFolders properties which are objects having their own properties. The output will not export properly to CSV but can be saved to and retrieved from XML using the Import-Clixml and Export-Clixml cmdlets.
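The difference between the two formats can be seen with a small stand-in object. The object below is made up for illustration and only mimics the shape described above (a website with a nested Bindings collection); it is not produced by Get-WebSite.ps1.

```powershell
# Stand-in object with a nested Bindings property
$Site = [pscustomobject]@{
    WebSiteName = 'mywebsite.net'
    Bindings    = @(
        [pscustomobject]@{ HostName = 'mywebsite.net';     IP = ''; Port = 80 },
        [pscustomobject]@{ HostName = 'www.mywebsite.net'; IP = ''; Port = 8080 }
    )
}

# CSV flattens the nested property to its type name
$CsvLine = ($Site | ConvertTo-Csv -NoTypeInformation)[1]
$CsvLine

# CLIXML keeps the nested objects and their properties
$XmlFile = [IO.Path]::GetTempFileName()
$Site | Export-Clixml -Path $XmlFile
$Restored = Import-Clixml -Path $XmlFile
Remove-Item $XmlFile -Force

$Restored.Bindings | FT -a
```

The CSV row shows only the type name of the Bindings collection, while the object restored from XML still exposes HostName, IP, and Port for each binding.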

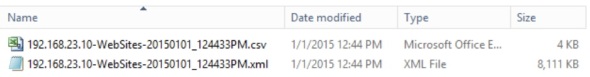

-XML and -CSV are optional parameters that take XML and CSV file names. When used, the script saves the raw website information in these files.

Example:

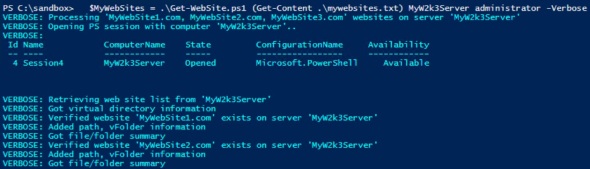

.\Get-WebSite.ps1 -WebSite MyWebSite1.com,MyWebSite2.com -ComputerName MyW2k3Server -AdminName administrator -Verbose

This example returns information about the websites ‘MyWebSite1.com’ and ‘MyWebSite2.com’ on the server ‘MyW2k3Server’. The -Verbose parameter displays progress information.

Example:

$MyWebSites = .\Get-WebSite.ps1 MyWebSite1.com,MyWebSite2.com MyW2k3Server administrator -Verbose

$MyWebSites | Out-GridView

This example displays the websites’ information in Powershell_ISE Grid View

$MyWebSites| select WebSiteName,ID,Server,FileCount,@{N='Size(MB)'; E={[Math]::Round($_.FolderSize/1MB,0)}} | FT -Auto

This example displays the websites’ information on the console screen

$MyWebSites| % {

  $_ | select WebSiteName,ID,Server,FileCount,@{N='Size(MB)'; E={[Math]::Round($_.FolderSize/1MB,0)}} | FT -Auto

  $_.Bindings | FT -Auto

  $_.vFolders | FT -Auto

  "-----------------------------------------------------------------------------------------------------"

}

This example displays the websites’ information on the console screen, including Bindings and vFolders

Example:

$MyWebSites = .\Get-WebSite.ps1 (Get-Content .\mywebsites.txt) MyW2k3Server administrator -Verbose

$MyWebSites | Out-GridView

This example retrieves information about each of the websites listed in the .\mywebsites.txt file and displays it in Powershell_ISE Grid View

Example:

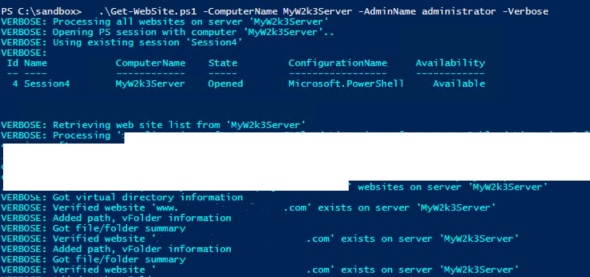

If the -WebSite parameter is not provided, the script retrieves information about all websites on the specified server.

.\Get-WebSite.ps1 -ComputerName MyW2k3Server -AdminName administrator -Verbose

This example will retrieve information on all web sites on server ‘MyW2k3Server’

Powershell script to update web.config file

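Function/script to update a web.config file. The script can be downloaded from the Microsoft Script Center Repository. The script can:

- Replace all occurrences of an old path with the new website path

- Comment out a section such as ‘configSections’

- Remove a section such as ‘/configuration/runtime’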

The script will make a backup copy of the web.config file before updating it.

Example:

Update-Web.Config -Destination E:\WebSites\Mywebsite1.com -CommentSection configSections -Verbose

The script comments out the ‘configSections’ section

Example:

Update-Web.Config -Destination E:\WebSites\Mywebsite1.com -OldPath D:\backme.up\websites\www.MyWebsite1.com\www -Verbose

The script replaces all occurrences of the string ‘D:\backme.up\websites\www.MyWebsite1.com\www’ with ‘E:\WebSites\Mywebsite1.com’

Example:

Update-Web.Config -Destination E:\WebSites\Mywebsite1.com -RemoveSection '/configuration/configSections' -Verbose

The script removes ‘/configuration/configSections’ section from web.config file

Example:

Update-Web.Config -Destination E:\WebSites\Mywebsite1.com -OldPath D:\backme.up\websites\www.MyWebsite1.com\www -CommentSection configSections -RemoveSection '/configuration/runtime' -Verbose

The script replaces all occurrences of the string ‘D:\backme.up\websites\www.MyWebsite1.com\www’ with ‘E:\WebSites\Mywebsite1.com’, comments out ‘configSections’ section, and removes ‘/configuration/runtime’ section from web.config file
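For readers curious how these operations can be done, here is a minimal sketch using the .NET XML classes on an in-memory sample. It is not the Update-Web.Config source: the sample web.config content is made up, and the sketch works on a string rather than a file, so the backup step is left out.

```powershell
$OldPath = 'D:\backme.up\websites\www.MyWebsite1.com\www'
$NewPath = 'E:\WebSites\Mywebsite1.com'

# Made-up web.config content that references the old path
$Raw = @"
<configuration>
  <configSections>
    <section name="sample" type="Sample.Type" />
  </configSections>
  <appSettings>
    <add key="DataFolder" value="$OldPath\data" />
  </appSettings>
</configuration>
"@

# 1. Replace every occurrence of the old path (literal, case-sensitive match)
$Updated = $Raw.Replace($OldPath, $NewPath)

# 2. Comment out the configSections node by swapping it for an XML comment
[xml]$Config = $Updated
$Node = $Config.SelectSingleNode('/configuration/configSections')
if ($Node) {
    # Note: this fails if the section text itself contains "--", which is invalid inside a comment
    $Comment = $Config.CreateComment($Node.OuterXml)
    $Node.ParentNode.ReplaceChild($Comment, $Node) | Out-Null
}

$Result = $Config.OuterXml
$Result
```

Removing a section instead of commenting it out would use $Node.ParentNode.RemoveChild($Node) in place of the ReplaceChild call.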

January 12, 2015 | Categories: Powershell, Windows 2003 Migration | Tags: comment section in web.config, Powershell, remove node from web.config, Update web.config, web.config