Options for using a Veeam Backup Repository on an Azure Virtual Machine

January 2015 update:

In December 2014, Microsoft announced the Public Preview release of Azure Premium Storage. See this post for details on Azure Premium Storage features. What does that mean for using Azure as a Veeam Backup Repository, or for Veeam Cloud Connect?

- Maximum disk capacity per VM remains a bottleneck at 32 TB.

- Only the D14 VM size can currently have 32x 1TB Page Blob disks. It comes with 16 cores, 112 GB RAM, a 127 GB SAS system disk, and an 800 GB SSD non-persistent temporary drive ‘d’ that delivers 768 MB/s read or 384 MB/s write throughput. The base price for this VM is $1,765/month.

- If using 32 Standard (spinning SAS) disks, set up as a single 16-column simple storage space for maximum space and performance, we get a 32 TB data disk that delivers 960 MB/s throughput or 8k IOPS (256 KB block size).

- 32x 1TB GRS Standard (HDD) Page Blobs cost $2,621/month

- 32x 1TB LRS Standard (HDD) Page Blobs cost $1,638/month

- If using 32 Premium (SSD) disks, set up as a single 16-column simple storage space for maximum space and performance, we get a 32 TB data disk that delivers 3,200 MB/s throughput or 80k IOPS (256 KB block size). Premium SSD storage is available as LRS only. The cost for 32x 1TB disks is $2,379/month.

- If using a D14 size VM with Cloud Connect, setting up the Veeam Backup and Replication 8, WAN Accelerator, and CC Gateway on the same VM:

- 16 CPU cores provide more than adequate processing for the WAN Accelerator, which is by far the component that uses the most CPU cycles here. It’s also more than enough for the SQL 2012 Express instance used by Veeam 8 on the same VM.

- 112 GB RAM is overkill here in my opinion. 32 GB should be plenty.

- 800 GB SSD non-persistent temporary storage is perfect for the WAN Accelerator global cache. WAN Accelerator global cache disk must be very fast. The only problem is that it’s non-persistent, but this can be overcome by automation/scripting to maintain a copy of the WAN Accelerator folder on the ‘e’ drive 32 TB data disk or even on an Azure SMB2 share.

- In my opinion, a cost-benefit analysis of Premium SSD storage versus Standard SAS storage for the 32 TB data disk shows that Standard storage is still the way to go for Veeam Cloud Connect on Azure. It’s $740/month cheaper (31% less) and delivers 960 MB/s throughput or 8k IOPS at 256 KB block size, which is plenty for Veeam.
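The cost comparison can be reproduced with a few lines of Python. This is only a sketch built from the monthly prices quoted in the list above; the variable and function names are mine, and the prices are January 2015 figures that will have changed since.

```python
# Monthly prices quoted in the list above (USD, January 2015).
VM_D14 = 1765
STANDARD_LRS_32TB = 1638
STANDARD_GRS_32TB = 2621
PREMIUM_LRS_32TB = 2379


def monthly_total(storage_cost, vm_cost=VM_D14):
    """Cost of the D14 VM plus 32x 1TB data disks, per month."""
    return vm_cost + storage_cost


# Difference between Premium (LRS only) and Standard LRS storage.
savings = PREMIUM_LRS_32TB - STANDARD_LRS_32TB
savings_pct = 100 * savings / PREMIUM_LRS_32TB

print(f"Standard LRS saves ${savings}/month ({savings_pct:.0f}% of the Premium storage cost)")
print(f"D14 + Standard LRS: ${monthly_total(STANDARD_LRS_32TB):,}/month")
print(f"D14 + Standard GRS: ${monthly_total(STANDARD_GRS_32TB):,}/month")
print(f"D14 + Premium LRS:  ${monthly_total(PREMIUM_LRS_32TB):,}/month")
```

Note that the percentage is relative to the storage cost alone. Once the VM price is included, the relative difference between the two configurations is smaller.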

10/20/2014 update:

Microsoft announced a new “Azure Premium Storage”. Main features:

- SSD-based storage (persistent disks)

- Up to 32 TB of storage per VM – This is what’s relevant here. I wonder why not extend that capability to ALL Azure VMs??

- 50,000 IOPS per VM at less than 1 ms latency for read operations

- Not in Azure Preview features as of 10/21/2014. No preview or release date yet.

High level Summary:

Options for using Veeam Backup Repository on an Azure Virtual Machine include:

- Use Standard A4 VM with 16TB disk and about 300 Mbps throughput (VM costs about $6.5k/year)

- Use a small Basic A2 VM with several Azure Files SMB shares. Each is 5 TB, with 1 TB max file size, and 300 Mbps throughput.

Not an option:

- Use CloudBerry Drive to make Azure Block Blob storage available as a drive letter. This was a promising option, but testing showed it fails with files of 400 GB and larger. Its caching feature also makes it unsuitable for this use case.

An Azure subscription can have up to 50 Storage Accounts as of September 2014 (100 as of January 2015), at 500 TB capacity each. Block Blob storage is very cheap. For example, the Azure price calculator shows that 100 TB of LRS (Locally Redundant Storage) will cost a little over $28k/year. LRS maintains 3 copies of the data in a single Azure data center.
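To put those limits in perspective, here is a rough calculation in Python. It is a sketch only: it treats "a little over $28k/year" as exactly $28,000 and uses 1 PB = 1,000 TB, both of which are simplifying assumptions on my part.

```python
TB_PER_ACCOUNT = 500  # capacity per storage account, as quoted above

# Total addressable capacity per subscription at each account limit.
for label, accounts in (("September 2014", 50), ("January 2015", 100)):
    total_pb = accounts * TB_PER_ACCOUNT / 1000
    print(f"{label}: {accounts} accounts x {TB_PER_ACCOUNT} TB = {total_pb:.0f} PB")

# Approximate LRS block blob price per TB per month,
# derived from roughly $28k/year for 100 TB.
approx_cost_per_tb_month = 28_000 / 100 / 12
print(f"About ${approx_cost_per_tb_month:.2f} per TB per month")
```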

However, taking advantage of that vast cheap reliable block blob storage is a bit tricky.

Veeam accepts the following types of storage when adding a new Backup Repository:

I have examined the following scenarios of setting up Veeam Backup Repositories on an Azure VM:

1. Locally attached VHD files:

In this scenario, I attached the maximum of 2 VHD disks to a Basic A1 Azure VM and set them up as a Simple volume for maximum space and IOPS. This provides a 2 TB volume and 600 IOPS according to Virtual Machine and Cloud Service Sizes for Azure. Using 64 KB block size:

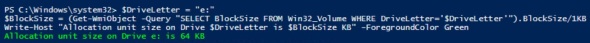

This short script shows block size (allocation unit) for drive e: used:

$DriveLetter = "e:"

$BlockSize = (Get-WmiObject -Query "SELECT BlockSize FROM Win32_Volume WHERE DriveLetter='$DriveLetter'").BlockSize/1KB

Write-Host "Allocation unit size on Drive $DriveLetter is $BlockSize KB" -ForegroundColor Green

At 600 IOPS and a 64 KB block size, this should come to 37.5 MB/s (300 Mbps) using the formula

IOPS = BytesPerSec / TransferSizeInBytes

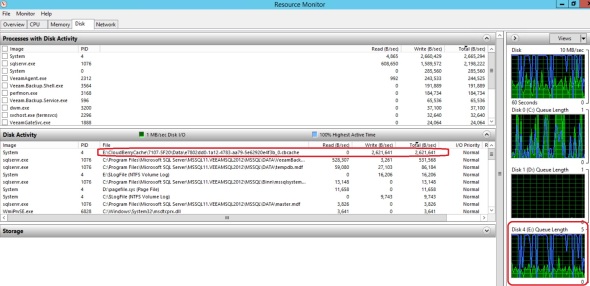
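Applying the formula directly is a quick sanity check. The sketch below, in Python, rearranges it to BytesPerSec = IOPS × TransferSizeInBytes for the 600 IOPS cap and 64 KB block size of this test; the function name is mine. It assumes every I/O is a full 64 KB transfer, which real workloads will not always match.

```python
def throughput_from_iops(iops, transfer_size_kb):
    """Rearranged formula: BytesPerSec = IOPS * TransferSizeInBytes."""
    bytes_per_sec = iops * transfer_size_kb * 1024
    mb_per_sec = bytes_per_sec / (1024 * 1024)  # megabytes per second
    mbps = mb_per_sec * 8                       # megabits per second
    return mb_per_sec, mbps


mb_s, mbit_s = throughput_from_iops(600, 64)
print(f"{mb_s:.1f} MB/s ({mbit_s:.0f} Mbps)")  # 37.5 MB/s (300 Mbps)
```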

But actual throughput was about 2.5 MB/s (20 Mbps) as shown on the VM:

and in the Azure Management Portal:

Based on these results, I expect a Standard A4 Azure VM configured with a 16 TB simple (striped) disk, with a maximum of 8k IOPS, to actually deliver about 35 MB/s or 300 Mbps.
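One way to arrive at a figure in that range is to assume that real-world throughput scales linearly with the VM's IOPS cap. That linear scaling is an assumption for illustration, and the names below are mine. A Python sketch:

```python
measured_mb_s = 2.5       # throughput observed on the Basic A1 VM
measured_iops_cap = 600   # Basic A1 with 2 data disks
target_iops_cap = 8000    # Standard A4 with 16 data disks


def scale_throughput(measured, from_iops, to_iops):
    """Scale a measured throughput linearly with the IOPS cap (an assumption)."""
    return measured * to_iops / from_iops


estimate = scale_throughput(measured_mb_s, measured_iops_cap, target_iops_cap)
print(f"about {estimate:.0f} MB/s ({estimate * 8:.0f} Mbps)")
```

This gives roughly 33 MB/s, in the same ballpark as the estimate above. Only an actual test on an A4 VM would confirm it.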

2. Using Azure Files:

Azure Files is a new Azure feature that provides SMB v2 shares to Azure VMs with 5TB maximum per share and 1TB maximum per file.

Testing showed throughput upwards of 100 Mbps. Microsoft suggests that Azure Files throughput is up to 60 MB/s per share.

Although this option provides adequate bandwidth, its main problem is the 1 TB maximum file size, which means no backup job can exceed 1 TB. That is quite limiting in large environments.
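To see how these limits bite, the Python sketch below checks a list of backup file sizes against the 1 TB per-file and 5 TB per-share limits. The file sizes are made-up example values, and the share count is only a lower bound because it ignores how files actually pack into shares.

```python
import math

MAX_FILE_TB = 1   # Azure Files per-file limit quoted above
MAX_SHARE_TB = 5  # Azure Files per-share limit quoted above


def check_backup_files(file_sizes_tb):
    """Return the files that exceed the per-file limit, and a lower bound
    on the number of shares needed for the files that do fit."""
    too_large = [s for s in file_sizes_tb if s > MAX_FILE_TB]
    fitting_total = sum(s for s in file_sizes_tb if s <= MAX_FILE_TB)
    min_shares = math.ceil(fitting_total / MAX_SHARE_TB)
    return too_large, min_shares


# Hypothetical backup file sizes in TB.
too_large, min_shares = check_backup_files([0.4, 0.9, 2.5, 0.8, 3.0])
print(f"Files over the 1 TB limit: {too_large}")
print(f"Shares needed for the rest (at least): {min_shares}")
```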

CloudBerry Drive Caching in Azure Virtual Machines and Azure Storage

CloudBerry Drive Server for Windows Server is a tool by CloudBerry that makes cloud storage available on a server as a drive letter. I have examined 10 different tools that perform this task, and CloudBerry Drive provided the most functionality. The use case I was after is the ability to upload large files from on-prem servers to Azure VMs. Specifically, I’m testing Veeam Cloud Connect with Azure, which allows for off-site backup to Azure. The backup files are multi-TB each.

However, digging deeper into how CloudBerry drive works showed that CloudBerry Drive caches each received file to a local folder on the VM. According to CloudBerry support this is a must and cannot be turned off. This poses several problems:

- It defeats the purpose of using CloudBerry in the first place. An Azure VM (as of 10/2/2014) can have a maximum of 16 TB of local storage, which is implemented as 16x 1TB VHD files (page blobs). The point of using CloudBerry Drive is to be able to access Azure block blob storage, which has a 500 TB maximum per storage account.

- It puts a file size limit equivalent to the maximum amount of space on the local drive used for CloudBerry caching.

- CloudBerry Drive then takes the uploaded file from the cache folder and copies it to the Azure block blob storage account.

- This makes the destination file in Azure block blob storage locked and unavailable for many hours during that 2nd copy process. For example, if the Veeam cloud backup job successfully backed up 10 out of 12 VMs, and we retry the remaining 2 VMs, the job will fail since the destination file in Azure is locked by CloudBerry.

- The 2nd copy uses a great amount of read IOPS from the local drive (Page Blobs), and write IOPS to the destination Block Blob storage. This makes any other task on the VM, such as another backup job, practically impossible even if that job uses other unlocked files, because CloudBerry uses up all available IOPS on the VM for hours or even days.

- The copy unnecessarily incurs transactional, IOPS, and bandwidth charges on an Azure VM.

- There are better ways to copy data within the same Azure Storage account that are much more efficient and much less costly, such as instantaneous shadow copies.

Summary:

CloudBerry Drive Server for Windows Server caches files locally which makes it not suitable for use on Azure VMs.

Using CloudBerry Drive to make Azure Block Blob Storage available to Azure Virtual Machines

There are a number of ways to make Azure storage available to a VM in Azure:

- Attach a number of local VHD disks. There are a couple of issues with this approach:

- The maximum we can use is 16TB, and

- We’ll have to use an expensive A4 sized VM that has unneeded RAM and CPU cores.

- Map drives to a number of Azure File SMB shares. There are a couple of issues with this approach:

- The share mappings are not persistent, although we can use the CMDKEY tool as a workaround.

- There’s a maximum of 5TB capacity per share, and a maximum of 1TB capacity per file.

- Use a 3rd party tool such as CloudBerry Drive to make Azure block blob storage available to the Azure VM. This approach has the 500 TB Storage account limit, which is adequate for use with Veeam Cloud Connect. Microsoft suggests that the maximum NTFS volume size is between 16 TB and 256 TB on Server 2012 R2 depending on allocation unit size. Using this tool we get a 128 TB disk, suggesting an allocation unit size of 32 KB.
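The relationship between allocation unit size and maximum NTFS volume size follows from the NTFS implementation limit of 2^32 − 1 clusters per volume. A short Python sketch (the function name is mine) shows how the 16 TB to 256 TB range and the 128 TB figure fall out of that limit:

```python
MAX_CLUSTERS = 2**32 - 1  # NTFS implementation limit on clusters per volume


def max_ntfs_volume_tb(cluster_kb):
    """Maximum NTFS volume size in TB for a given allocation unit size in KB."""
    return MAX_CLUSTERS * cluster_kb * 1024 / 2**40


for kb in (4, 8, 16, 32, 64):
    print(f"{kb:>2} KB allocation unit -> {max_ntfs_volume_tb(kb):.0f} TB maximum volume")
```

A 4 KB allocation unit gives the 16 TB lower bound, 64 KB gives the 256 TB upper bound, and 32 KB matches the 128 TB disk seen here.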

To install CloudBerry Drive on an Azure VM:

– Install the Visual C++ 2010 x64 Redistributable prerequisite:

– Run CloudBerryDriveSetup, accept the defaults, and reboot.

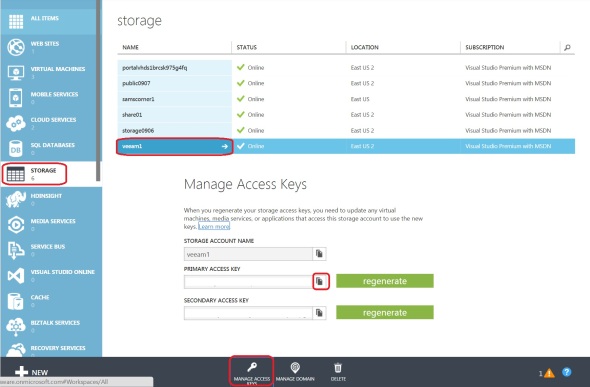

– In the Azure Management Portal, obtain your storage account access key (either one is fine):

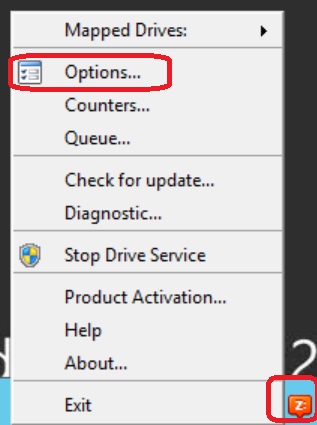

– Back in the Azure VM, right-click on the CloudBerry icon in the system tray and select Options:

– Under the Storage Accounts tab, click Add, pick Azure Blob as your Storage Provider, enter your Azure Storage account name and key:

– Under the Mapped Drives tab, click Add, type in a volume label, click the button next to Path, and pick a Container. This is the container we created in step 3 above:

– You can see the available volumes in Windows Explorer or by running this command in PowerShell:

Get-Volume | FT -AutoSize

Add VHD disks to the VM for the CloudBerry Drive cache:

We’ll add VHD disks to the VM for that cache folder to have sufficient disk space and IOPS for the cache.

Highlight the Azure VM, click Attach at the bottom, and click Attach empty disk. Enter a name for the disk VHD file, and a size. The maximum size allowed is 1023 GB (as of September 2014). Repeat this process to add as many disks as allowed by your VM size. For example, an A1 VM can have a maximum of 2 disks, A2 max is 4, A3 max is 8, and A4 max is 16 disks.
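Since the cache drive size caps the largest file CloudBerry Drive can handle, it helps to know how much cache space each VM size can provide. The Python sketch below uses the disk counts and the 1023 GB per-disk limit quoted above. It assumes all disks are pooled into one simple (striped) space and ignores any Storage Spaces or file system overhead.

```python
MAX_DISK_GB = 1023  # maximum size of one data disk (as of September 2014)

# Maximum number of data disks per VM size, as listed above.
MAX_DATA_DISKS = {"A1": 2, "A2": 4, "A3": 8, "A4": 16}


def max_simple_space_tb(vm_size):
    """Raw capacity in TB if every allowed disk is pooled into one simple space."""
    return MAX_DATA_DISKS[vm_size] * MAX_DISK_GB / 1024


for size in MAX_DATA_DISKS:
    print(f"{size}: {MAX_DATA_DISKS[size]:>2} disks -> {max_simple_space_tb(size):.2f} TB")
```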

In the Azure VM, I created a 2 TB disk using Storage Spaces as shown:

This is set up as a simple disk for maximum disk space and IOPS, but it can be set up as mirrored disks as well.

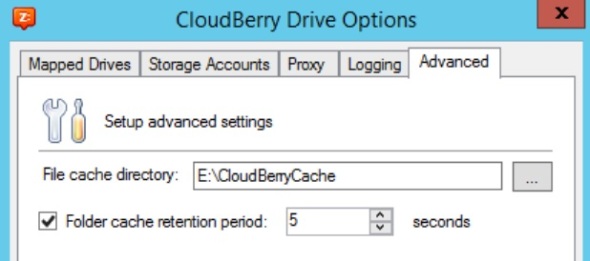

Create a folder for the CloudBerry Drive cache on the new disk, and configure CloudBerry Drive to use it:

It’s important to have enough disk space on the drive where CloudBerry caching occurs. The available space on the caching drive limits the file size that can be handled through CloudBerry Drive, and that limit could be much less than the 128 TB available on a CloudBerry Drive that has an Azure Block Blob back end.